Tag production

Post details

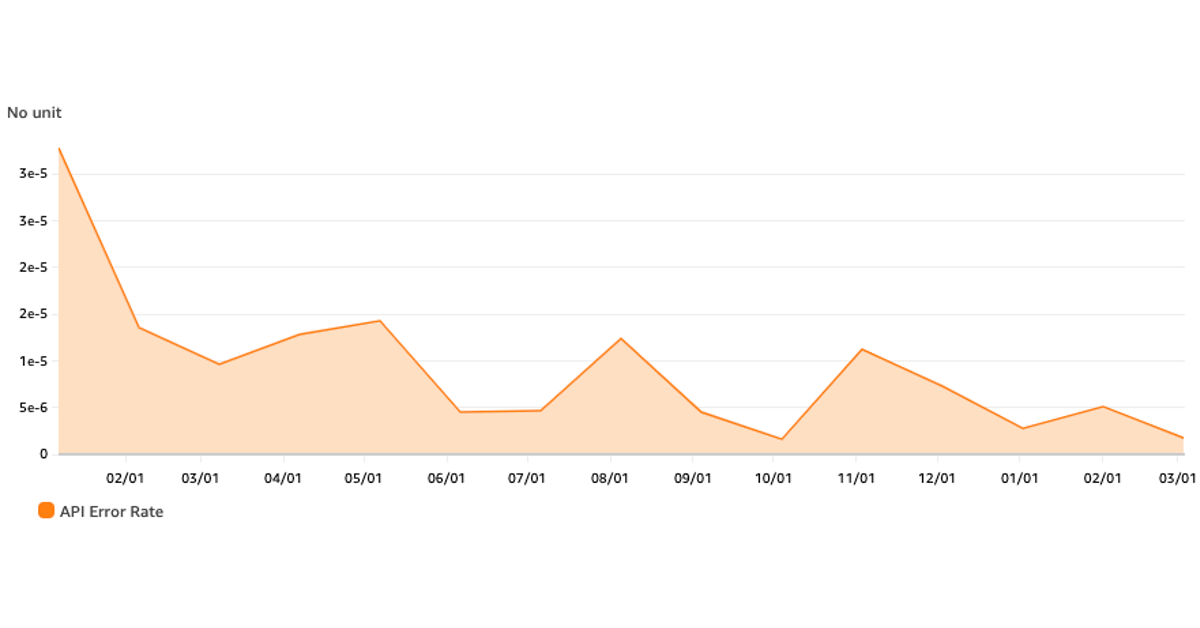

How one process helped us decrease our error rate 17x in one year.

Post details

GitHub recently experienced several availability incidents, both long running and shorter duration. We have since mitigated these incidents and all systems are now operating normally. Read on for more details about what caused these incidents and what we’re doing to mitigate in the future.

Lessons learned from modernising a lesser maintained (Spring Boot) service (16 mins read).

What I learned from taking ownership of a lesser maintained service and bringing it up to a better standard.

Post details

When you experience your first production outage

Molly Struve 🦄 (@molly_struve)Thu, 14 Apr 2022 19:00 +0000

Post details

Hot take: Anyone dunking on Atlassian about the fact that an outage like this could happen should not be trusted near production environments because they're either lying or don't have the experience to know what they're talking about.

Mark Imbriaco (@markimbriaco)Fri, 15 Apr 2022 13:00 +0000

Post details

It was MySQL, with the resource contention, in the database cluster

Post details

This week we’re joined by Nora Jones, founder and CEO at Jeli where they help teams gain insight and learnings from incidents. Back in December Nora shared here thoughts in a Changelog post titled “Incident” shouldn’t be a four-letter word - which got a lot of attention from our readers. Today we’re talking with Nora a...

Post details

oh you have a weighted blanket? haha that's cute, I sleep with the crushing weight of all the shitty code I wrote years ago still running in production

christina (@cszhu)Wed, 05 Jan 2022 17:49 GMT

Post details

Fully expecting to learn that all the recent high-profile outages were due in part to engineer fatigue Your fave cloud / saas providers are run by humans, and they are very, very burnt outmx claws (@alicegoldfuss)Fri, 17 Dec 2021 20:11 GMT

Post details

sorry, really disagree that it’s just an aesthetic. cynical tweets about other companies do great on twitter, so hugops is a less trendy but more empathetic response from a specific part of the field recognizing job complexities; a _more_ advanced conversation IMO.

Kara Sowles (@FeyNudibranch)Mon, 29 Nov 2021 02:48 GMT

Post details

I mean, I’ve broken systems used by millions, it can feel so lonely and hopeless, I’d honestly be happy to hear folks out there were on my side. Maybe that’s just me.

Wade Armstrong (@kaizendad)Sun, 28 Nov 2021 21:54 GMT

Post details

Alexa, show me someone who’s never been responsible for a production workload.

Post details

“hugops” is just a way to tweet about a company’s outage without looking like a jerk, but y’all aren’t ready for that conversation

Sam Kottler (@samkottler)Sun, 28 Nov 2021 15:40 GMT

Tim Banks stands 5 feet, 8 inches (@elchefe)Mon, 29 Nov 2021 07:20 GMT

Post details

I mean, yes? That is the point of it? We enjoy dissecting outages and hugops is to indicate that we still sympathize with those fighting the fire even as we speculate about it.

Laurie Voss (@seldo)Sun, 28 Nov 2021 23:30 GMT

Post details

i’ll say hugops is dope in comparison to getting death threats for the online game you work on being down

ryan kitchens (@this_hits_home)Sun, 28 Nov 2021 20:49 GMT

Use (End-to-End) Tracing or Correlation IDs (4 mins read).

Why you should be requesting, and logging, a unique identifier per request for better supportability.

Post details

I hadn't realized how much I missed prod debugging after working on a non-production codebase for the past few months. It's sort of exhilarating! (Yes, I'm a weirdo)Laurie (@laurieontech)Tue, 16 Nov 2021 19:31 GMT

Post details

Same with automated testing prior to deployment. This is a good thing though. Those hard problems were always there, you just never had time to look for them due to all the simpler issues taking up all your time.Post details

it's a bit counterintuitive, but the better-instrumented and the more mature your systems are, the fewer problems you'll find with automated alerting and the more you'll have to find by sifting around in production by hand. twitter.com/arclight/statu…Charity Majors (@mipsytipsy)Tue, 09 Nov 2021 22:13 GMT

Tom Binns (@fullstacktester)Wed, 10 Nov 2021 16:08 GMT

Post details

it's a bit counterintuitive, but the better-instrumented and the more mature your systems are, the fewer problems you'll find with automated alerting and the more you'll have to find by sifting around in production by hand.

Post details

I'm reminded of Clifford Stoll's "The Cuckoo's Egg" where he detected severe security vulnerabilities based on a monthly $0.25 accounting discrepancy that had no good explanation. Systems weren't falling over, nothing was tripping an alert but the system was subtly off-normal

arclight (@arclight)Fri, 29 Oct 2021 09:12 +0000

Charity Majors (@mipsytipsy)Tue, 09 Nov 2021 22:13 GMT

Post details

I organized a department-wide storytime titled “That Time I Broke Prod” and I think it may have been my favorite hour that I ever spent with colleagues. Normalize talking about failure, and remember that your manager (me) has fucked up way worse than you ever will 🥰

Denise Yu 💉💉 (@deniseyu21)Sat, 16 Oct 2021 22:10 +0000

Post details

unplanned learning opportunities!

Post details

Ian "Snark Vader" Smith (@metaforgotten)Tue, 07 Sep 2021 19:10 +0000

Liz Fong-Jones (方禮真) (@lizthegrey)Tue, 07 Sep 2021 19:12 +0000

Post details

A root cause you never hear: X, who had experience with the codebase, left the company, and so they weren't here to notice the issue and prevent it from metastasizing into a full-blown incident.Lorin Hochstein (@norootcause)Wed, 01 Sep 2021 05:10 +0000

Post details

I'm starting to see incidents as essential for knowledge sharing. If you're not experiencing any, it then makes sense to periodically introduce controlled incidents to learn about your infrastructure and how it behaves. Note: Hardly an original thought/realisation.Lou ☁️ 👨💻🏋️♂️🎸🚴🏻♂️🏍 (@loujaybee)Mon, 02 Aug 2021 12:41 +0000

Post details

Everyone should be on-call because everyone should share the load The load exists because companies don't invest in proper infrastructure and ample headcount Yet another way this industry will grind you to dustPost details

principal engineers should be on call there - i said itkris nóva (@krisnova)Sat, 10 Jul 2021 18:46 +0000

bletchley punk (@alicegoldfuss)Sun, 11 Jul 2021 20:34 +0000

Post details

Quickest way to see the root cause of an outage is to check the #hugops hashtag.Terence Eden (@edent)Tue, 08 Jun 2021 10:06 +0000

Post details

Thanks to the poor engineer at @cuvva for making my day 😂Tim Dobson (@tdobson)Thu, 03 Jun 2021 09:47 +0000

Post details

In general, staying calm during an incident is a superpower. It’s like the difference between how you code normally and how you code during an interview. It’s also a skill that you can learn over time. Even if you’re an anxious person, you can get better at it.Lorin Hochstein (@norootcause)Sat, 22 May 2021 22:21 +0000

Post details

Forgetting about the change window... again

shenetworks (@SerenaTalksTech)Tue, 16 Mar 2021 03:00 GMT

Post details

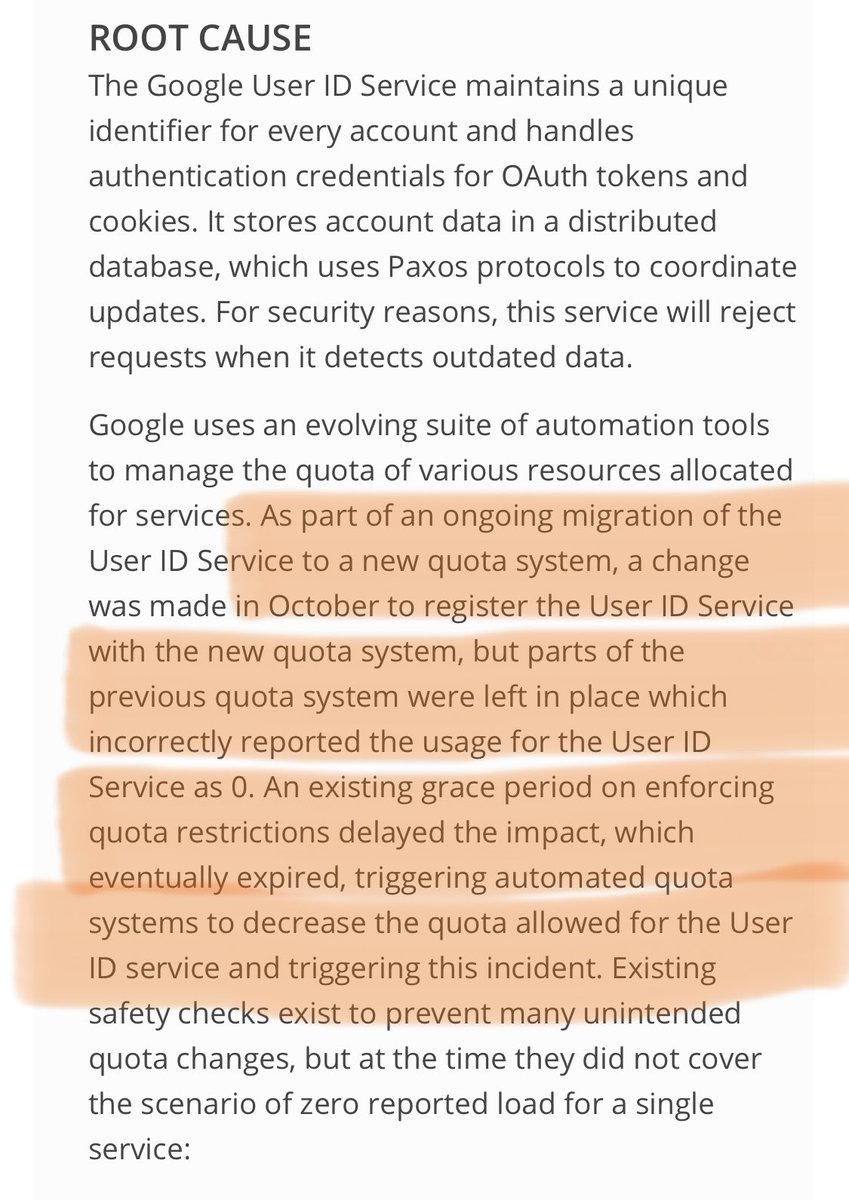

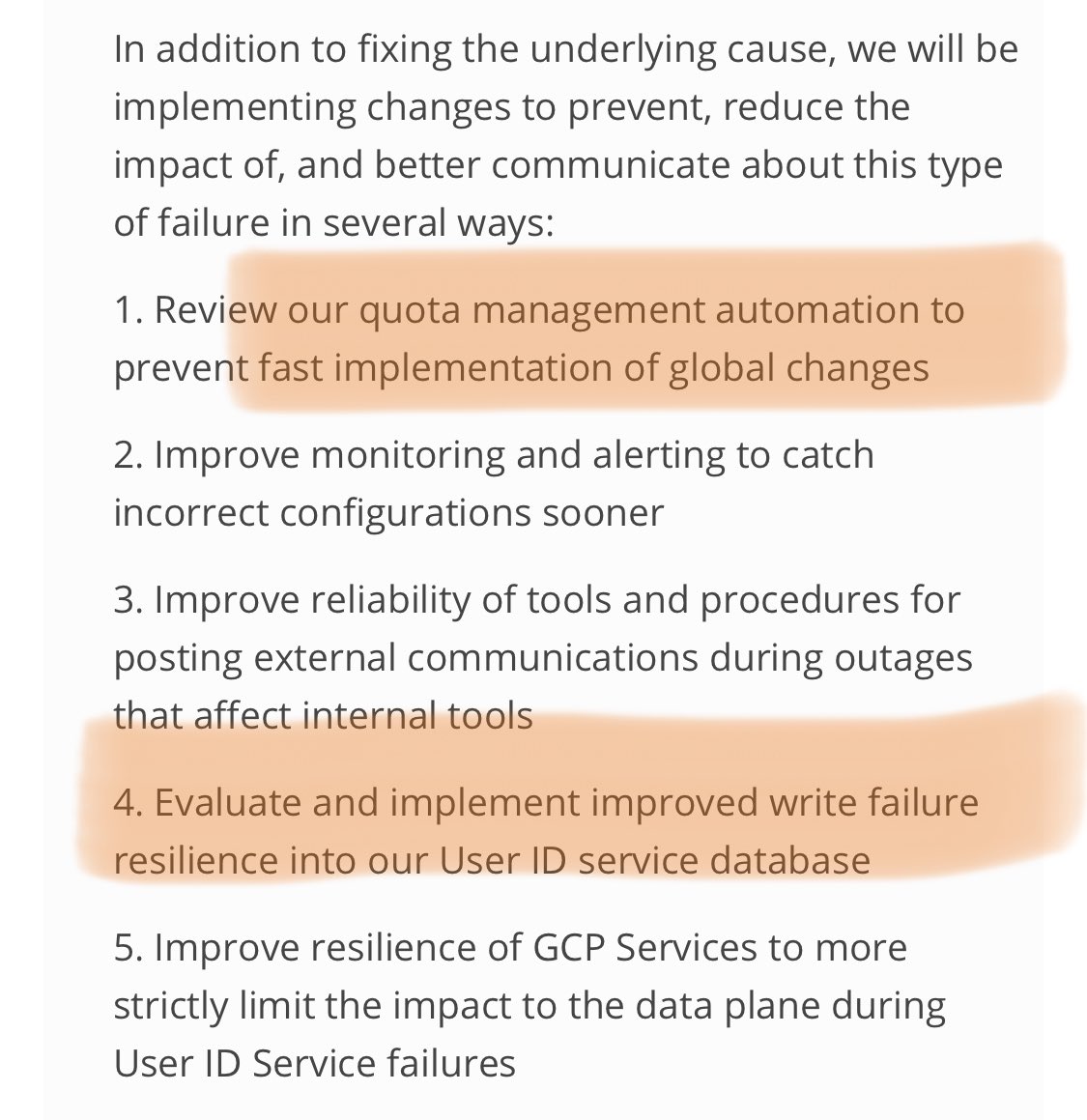

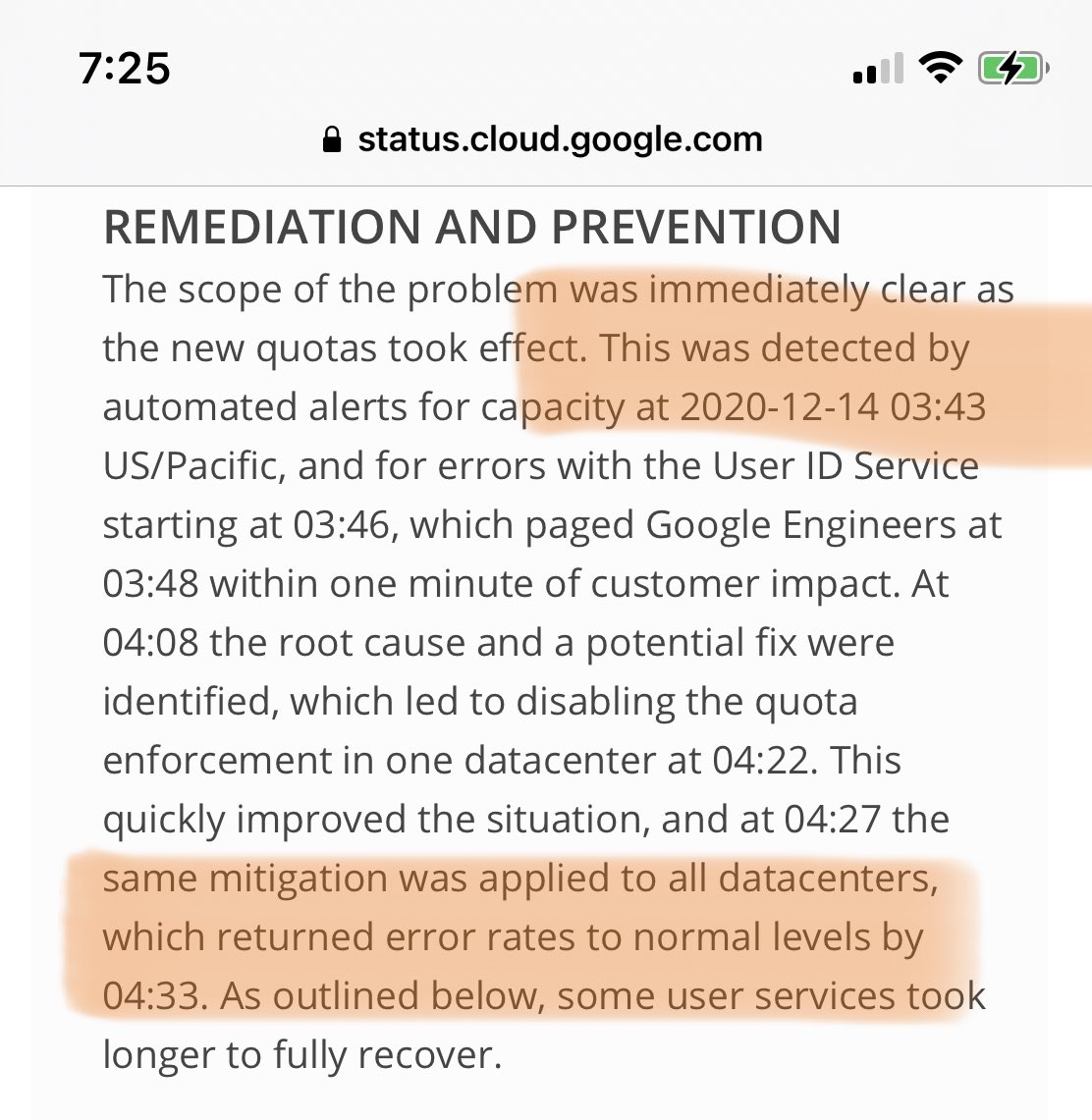

The full postmortem of the Google outage this week is now up: - incomplete migrations can be dangerous - it continues to baffle me that Google systems aren’t designed to minimize the blast radius. - Automated tools shouldn’t have the ability to make *global* config changes.Cindy Sridharan (@copyconstruct)Sat, 19 Dec 2020 03:42 GMT

Post details

Lot of armchair quarterbacking about the AWS/Cloudflare outages. I never comment on outages because, frankly, "the internet" is a Rube Goldberg machine, built on buckets of tears and non-obvious (and often non-technical) constraints. It's a miracle it works 1% as well as it does.

Matt Klein (@mattklein123)Sat, 28 Nov 2020 21:25 GMT

Post details

“... 50 years ago the conclusion "pilot error" as the main cause was virtually banned from accident investigation. The new mindset is that any system or procedure where a single human error can cause an incident is a broken system.” This is a great take re software outages 👏Ross Wilson (@rossalexwilson)Sun, 18 Oct 2020 11:20 +0000

Post details

It's funny looking at twitter and seeing the number of random companies reporting technical issues when there's an @awscloud outageKyle Arch (@KyleGeorgeArch)Tue, 25 Aug 2020 10:55 +0000

As I've said before, I'm a big fan of how Monzo handles their production incidents because it's quite polished and transparent

This is a really interesting read from Monzo about a recent incident they had. I really enjoy reading their incident management writeups because they show a tonne of detail, yet are stakeholder-friendly.

It's always interesting to see how other banks deal with issues like this, and what they would do to make things better next time.

You're currently viewing page 1 of 1, of 50 posts.